0.1.0 - Linear Regression

Linear regression is one of the oldest and most fundamental forms of linear modelling, developed/discovered by none other than Carl Fredriech Gauss. Much of modern machine learning is just a beefed up version of linear regression so its worth understanding it well.

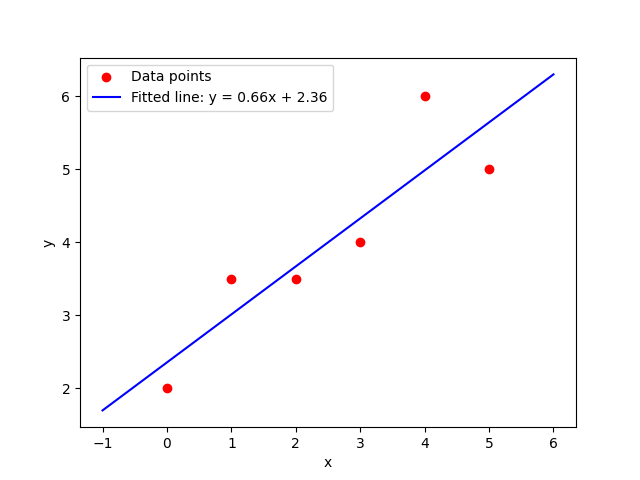

Linear regression is used to approximate the best solution to a system of equations that has no solution. When the system of equations has no solutions there is no hyperplane (a flat subspace of one dimension less than the space in which it resides) that all the data points lies on. Linear regression finds the best hyperplane that minimizes the error across all observations/data points.

Fig. 1: An example where you have 3 data points in R², there is no solution so we have to find the best approximate solution (which is a hyperplane in R¹ - a line).

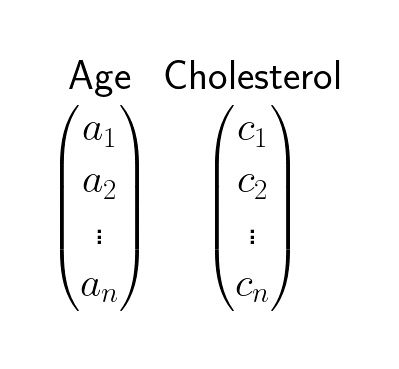

Here is an example of how to use linear regression. Assume you have data about age and cholesterol of patients:

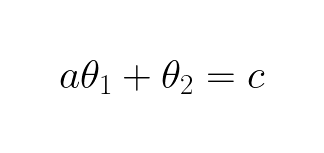

It could be interesting to see if there is a linear relationship between age and cholesterol. In other words we want to see if there is some number we can multiply with an age observation to get the cholesterol:

The linear model/function above tells us that newborn babies have 0 cholesterol, which is not true. To allow our model to have other values than 0 for cholesterol for babies with age 0 we add a bias term:

In general the bias term allows the model to intersect with the axes at different places than the origin. It gives the model a bigger solution space now, there are more “wiggle room” to approximate a solution. Notice the bias term can still be zero if we choose θ2 to be zero. Linear function with a bias term is called affine functions. The b is usually set to 1, that is because it simplifies the expression without reducing the solution space:

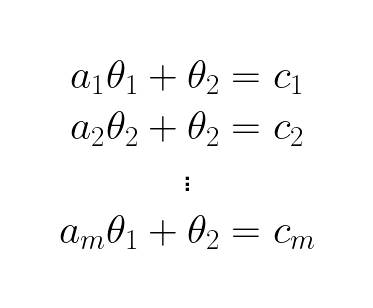

To find the optimal _θ_s/weights for our model/function we need to consider all the observations:

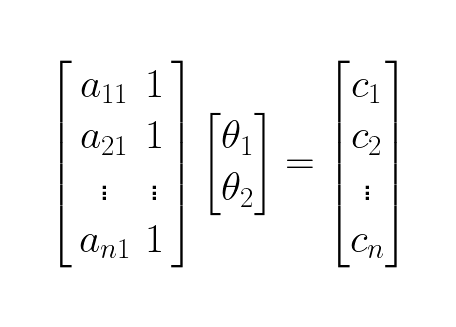

To make the system of equations suitable for efficient computation we write it in matrix form:

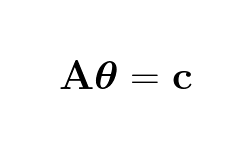

Which is the same as:

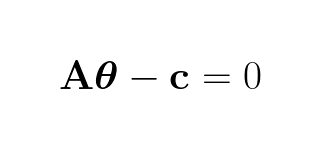

When there is a solution to the equations the error is 0:

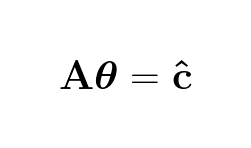

Since Aθ is a prediction of c we denote it:

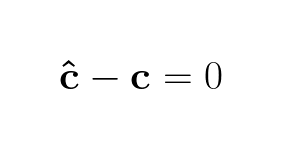

Whereby it follows that:

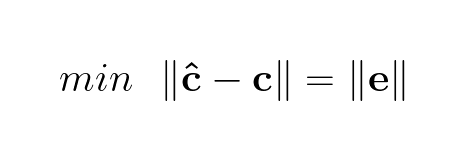

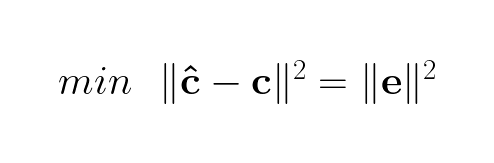

This is the expression for the error of the model’s approximation. In real world scenarios it is rarely the case that we find a solution where the error is 0, meaning ĉ, the estimates of cholesterol given age, does not equal c, the cholesterol values in the dataset. Therefore we need to approximate a solution where want to minimize the error as much as possible. Since original data points can fall on any side of the approximated hyperplane, the error can be negative or positive, so we want to minimize the absolute errors across all approximations of c.

Because we deal with matrices, we want to make our expressions as matrix friendly as possible. Turns out squaring the equation makes it much easier to deal with matrix-wise without affecting the solution:

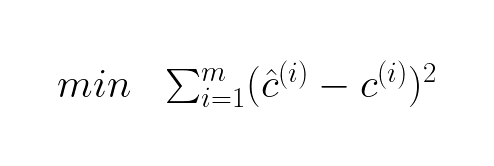

This can be written using the summation notation as:

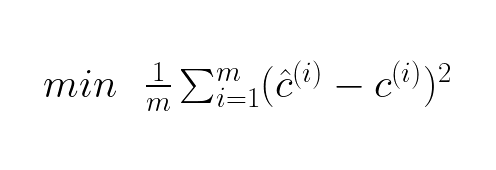

Since it would be more meaningful metric to see the average error for each prediction, we minimize the mean of the errors made:

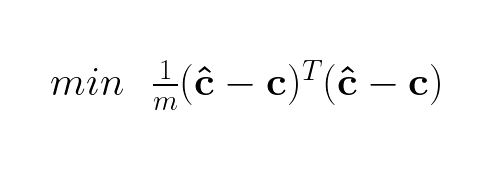

To write this in matrix form we notice that the summation block is the same as the dot product between the transposed error times the error:

If we substitute ĉ we get:

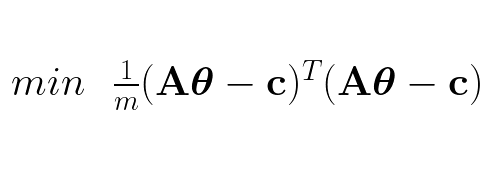

This is the function we want to minimize, the cost function. The convention is to call the cost function J:

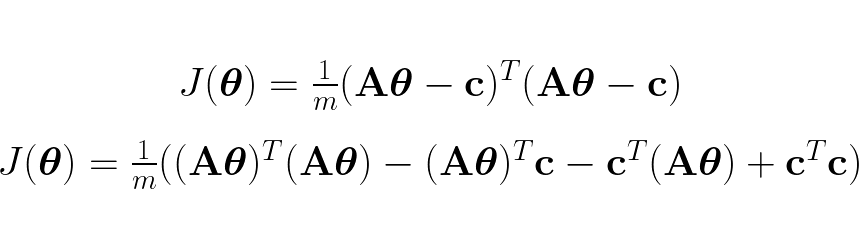

We know that when the derivative (single variable functions) or gradient (multivariable functions) is respectively zero or the zero-vector we find critical points (global/local minimum, global/local maximum, saddle points). In the case of the cost function for linear regression it can be showed that it is a convex function, this is done by seeing that the the Hessian matrix (second derivative for multivariable functions) of the cost function is positive semi-definite. Now that we know that, we prepare our cost function for derivation by expanding it:

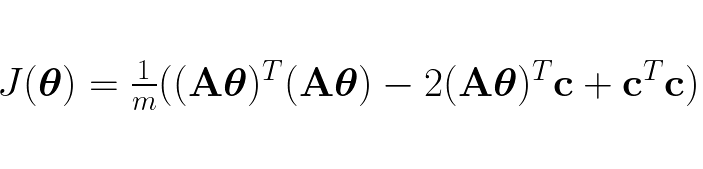

The two middle terms in the expanded cost function is the same dot product, each is just calculated in the reverse other of the other. Therefore we can “shrink” the cost function to:

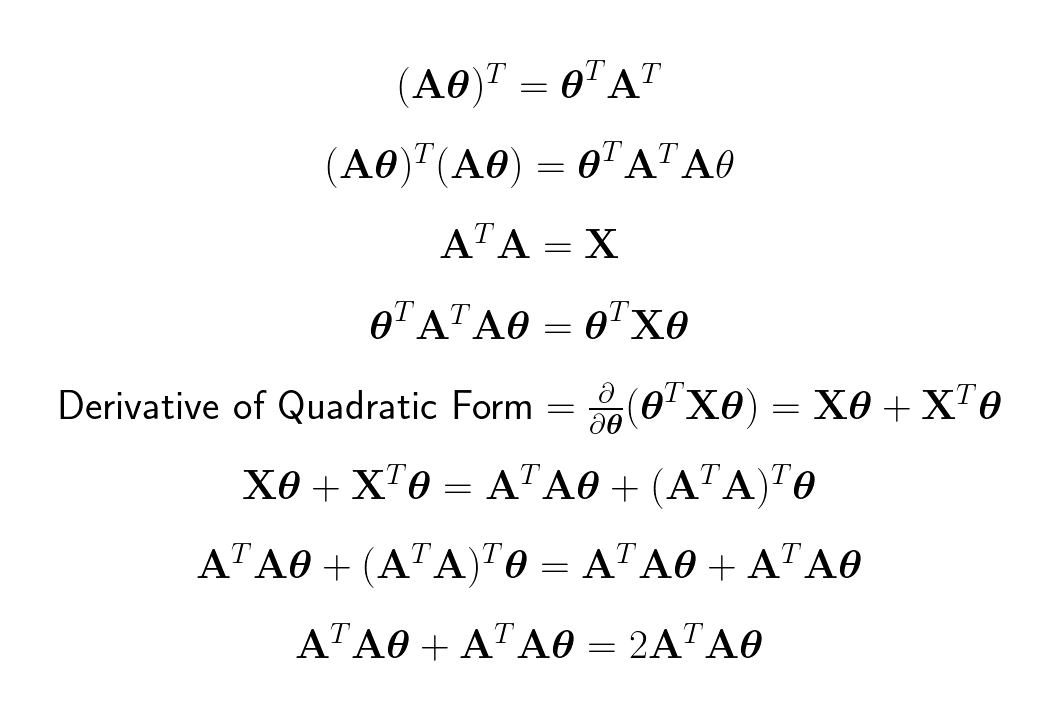

The cost function is now ready to be derived. The process is for the most part fairly simple, it just applying basic derivative formulas in calculus with one exception. When the first term is expanded we discover it is in a quadratic form, for which we can apply the quadratic form derivation rule in matrix calculus:

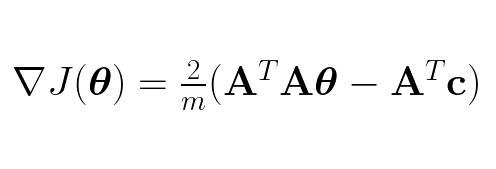

After derivating all the other terms we end up with the gradient :

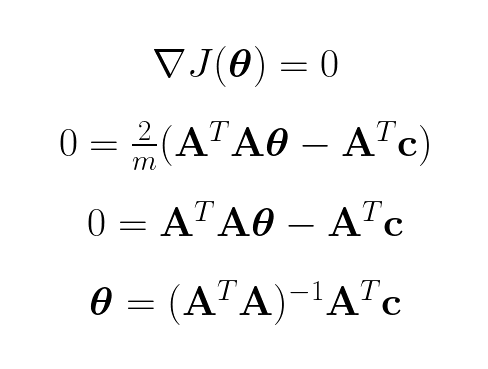

To find the global minimum of the cost function we set the gradient to zero and calculate the the _θ_s:

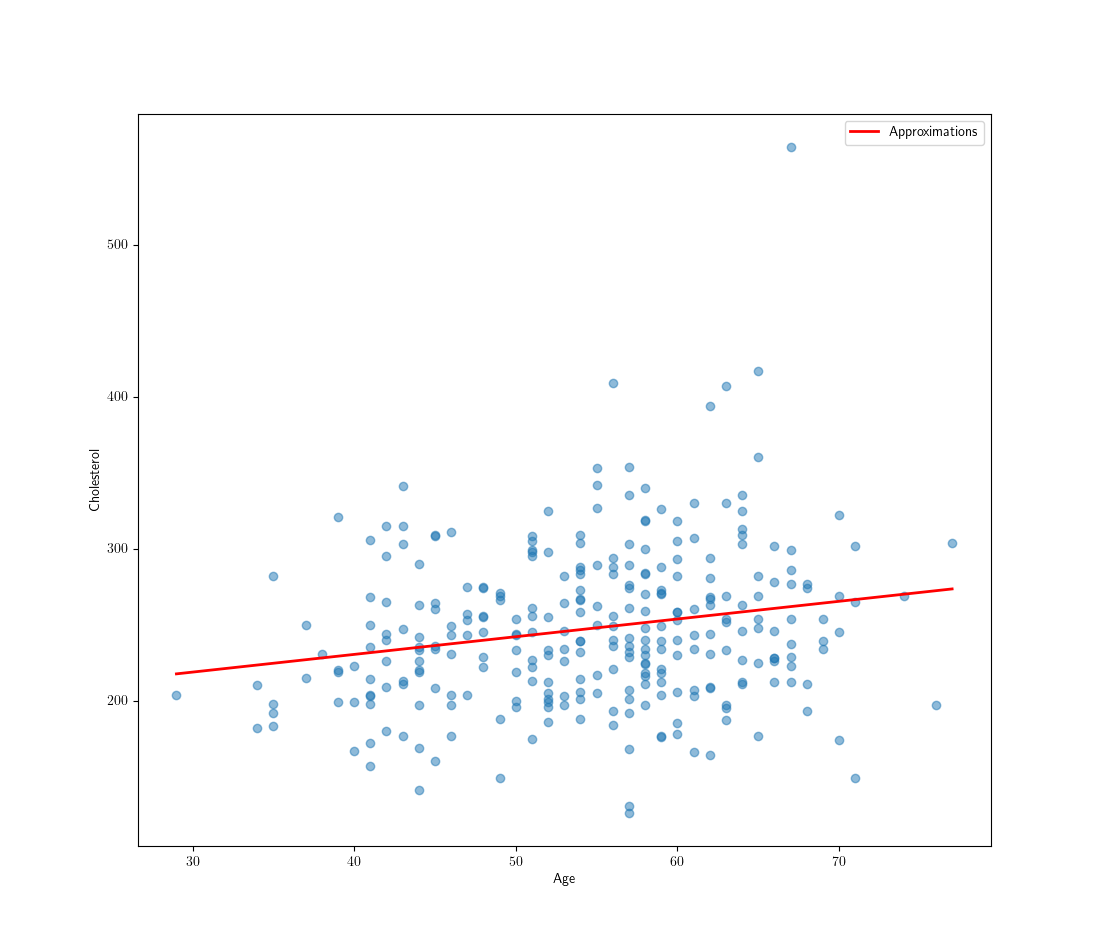

There it is the close form function to find all the weights, which is called the normal equation. Using age and cholesterol data points of patients in the Heart Disease UCI dataset we can use linear regression to see if there is any linear connection between age and cholesterol:

The red line shows the approximated best solution and and we can see there are some linear connection between age and cholesterol of patients and we can spot a trend in the data.

Some closing notes. In this article the close form function was used to find all the weights. The close form has its benefits, it yields an exact solution and is computational predictable (it can be computed in a finite number of steps is). However, it requires that the coefficient matrix multiplied by itself transposed (the Gram matrix) is invertible/non-singular, it can be prone to numerical errors if the Gram matrix is near singular (typically rounding errors - computers only allow a certain number of decimals to represent the number) and may be inefficient for very large matrices. To counter these limitations and disadvantages one could look into using numerical approaches instead like Newtons method or gradient descent.